Haptic feedback across iPad and iPhone


Haptic feedback enhances the tactile experience for users by providing physical sensations in response to interactions. This can be used alongside visual feedback to create more polished apps. In this post, we’ll explore implementing haptic feedback so it works across both iPhones and iPads — which requires going against Apple’s recommendations.

Apple’s Taptic Engine for haptic feedback has been built into all iPhones since 2015. iPads don’t have this hardware in the device itself, but since 2024, haptic feedback has been available through the Apple Pencil Pro and the trackpad on the Magic Keyboard for iPad Pro (M4). The built-in trackpads on MacBooks and the Bluetooth Apple Magic Trackpad also have haptic feedback hardware.

Our PDF SDK has supported haptic feedback on iPhones when resizing annotations since version 6 in 2016. When an annotation is close to its original aspect ratio or a square aspect ratio, we snap to exactly that aspect ratio, show a dashed line as visual feedback, and use a UIImpactFeedbackGenerator to play haptic feedback.
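To make the snapping behaviour concrete, here’s a minimal, UIKit-free sketch of the decision logic. It isn’t the SDK’s actual implementation: the AspectRatioSnapper type, the 5% relative tolerance, keeping the width while adjusting the height, and requesting feedback only when the snap target changes (rather than on every drag update) are all assumptions for illustration.

```swift
/// Hypothetical helper: snaps an annotation's aspect ratio (width / height) to a target
/// ratio when the current ratio is within a relative tolerance of it.
struct AspectRatioSnapper {
    let originalRatio: Double
    let tolerance: Double
    /// The ratio we are currently snapped to, or nil when not snapped.
    private(set) var currentTarget: Double?

    init(originalRatio: Double, tolerance: Double = 0.05) {  // assumed 5% tolerance
        self.originalRatio = originalRatio
        self.tolerance = tolerance
        self.currentTarget = nil
    }

    /// Returns the size to apply and whether haptic feedback should play.
    /// Feedback is requested only when the snap target changes to a new non-nil value,
    /// so holding a snapped size while dragging doesn't fire repeatedly.
    mutating func snap(width: Double, height: Double) -> (width: Double, height: Double, playFeedback: Bool) {
        let ratio = width / height
        // Candidate targets: the original aspect ratio and a square (1:1).
        let target = [originalRatio, 1.0].first { abs(ratio - $0) / $0 <= tolerance }
        let previous = currentTarget
        currentTarget = target
        guard let target = target else {
            return (width, height, false)
        }
        // Keep the width and derive the height so that width / height matches the target.
        return (width, width / target, target != previous)
    }
}
```

In an app, a playFeedback value of true is where you’d show the dashed guide line and call impactOccurred() on a UIImpactFeedbackGenerator.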

We wanted to add similar haptic feedback on iPads to support the Apple Pencil Pro and the trackpad on the Magic Keyboard for iPad Pro (M4).

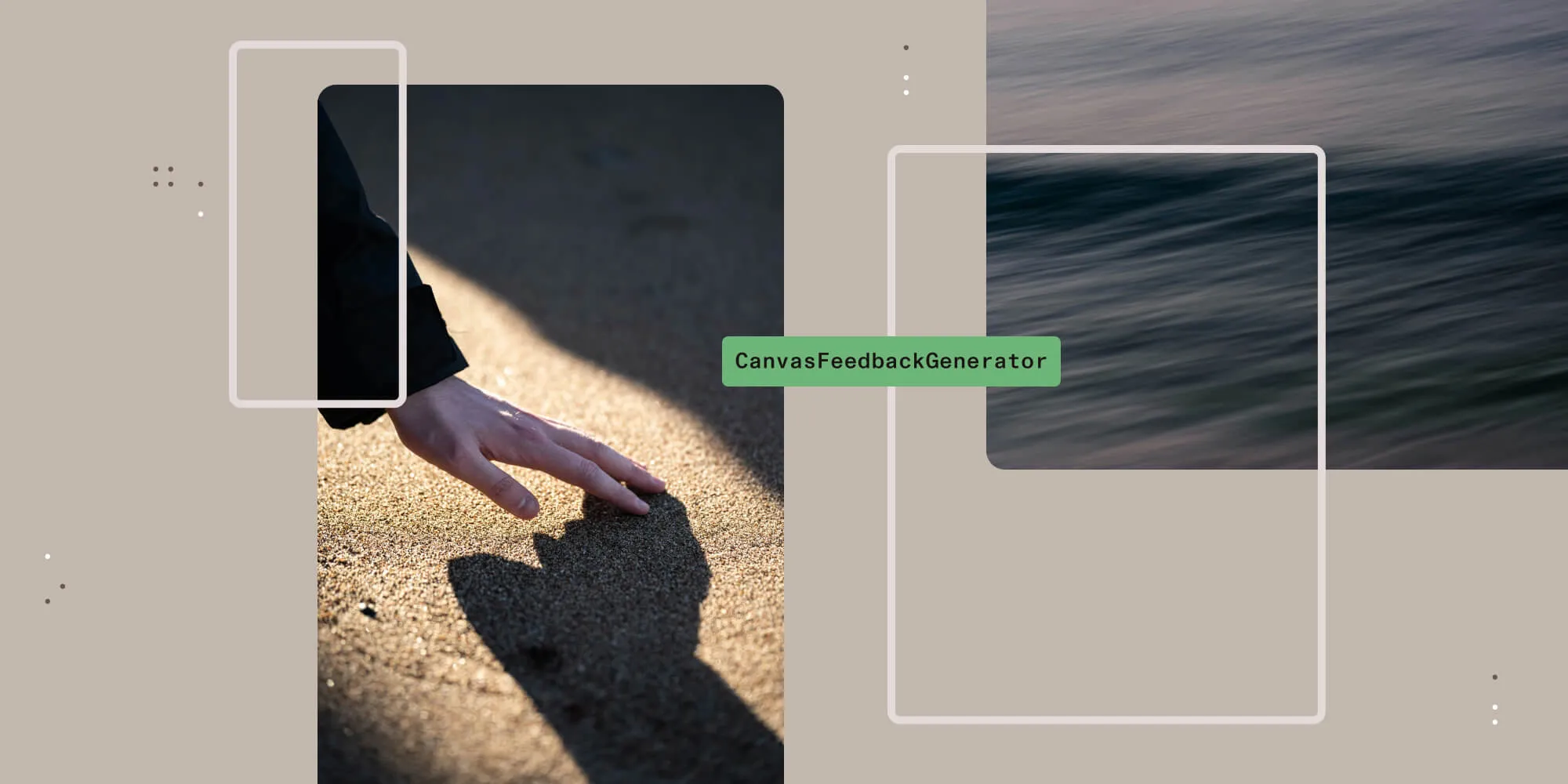

Canvas feedback generator

iOS 17.5 added UICanvasFeedbackGenerator. The documentation for this class says:

Use canvas feedback to indicate when a drawing event occurs, such as an object snapping to a guide or ruler.

This sounds perfect for what we’re building, so I thought we could simply change our UIImpactFeedbackGenerator to a UICanvasFeedbackGenerator, and then we’d have haptics on the Apple Pencil Pro and trackpad. This was true, but haptic feedback no longer occurred on iPhones. In other words:

- UICanvasFeedbackGenerator only works with the Pencil and Magic Keyboard on iPads, not on iPhones.

- UIImpactFeedbackGenerator only works on iPhones. You get no feedback from the Pencil or Magic Keyboard with iPads.

Trust or test?

Further research led me to Apple’s Playing haptic feedback in your app article, which says:

Not all types of haptic feedback play on every type of device. As a general rule, trust the system to determine whether it should play feedback. Don’t check the device type or app state to conditionally trigger feedback.

This is troublingly vague, and because I couldn’t find any documentation specifying exactly which feedback generators were expected to play haptic feedback in which situations, I made a test project to find out by experimentation. Here’s what I found (last tested with Xcode 16.2 and iOS 18.2):

| Generator | iPhone | Pencil | Trackpad drag | Trackpad scroll |
|---|---|---|---|---|
| Canvas alignment | ❌ | ✅ | ✅ | ✅ |
| Canvas path completed | ❌ | ✅ | ❌ | ✅ |
| Impact | ✅ | ❌ | ❌ | ✅ |
| Selection | ✅ | ❌ | ✅ | ✅ |
| Notification | ✅ | ❌ | ❌ | ❌ |

Notes:

- A ✅ means feedback occurred.

- Pencil refers to the Apple Pencil Pro on iPad Pro (M4).

- Trackpad refers to the Magic Keyboard for iPad Pro (M4).

- Trackpad scroll involves two fingers without clicking, detected using UIPanGestureRecognizer.allowedScrollTypesMask.

In my tests, these configurations never generated feedback, although this could be due to issues with the sequence of events in the test app:

- Apple Pencil Pro hover

- Trackpad hover on the Magic Keyboard for iPad Pro (M4)

- Any interaction on a Bluetooth Apple Magic Trackpad connected to an iPad

- Any interaction on any trackpad when the app ran on a Mac using Mac Catalyst

While the documentation says “Not all types of haptic feedback play on every type of device”, it’s more accurate to say no type of haptic feedback plays on every type of device.
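One way to check that claim against the results is to transcribe the table into data and query it. This is a small sketch: the Surface and Generator enums and the generators(covering:) function are hypothetical names, and the data reflects only the configurations in the table above.

```swift
// Surfaces from the test results table (Xcode 16.2, iOS 18.2).
enum Surface: CaseIterable {
    case iPhone, pencil, trackpadDrag, trackpadScroll
}

// Feedback generator APIs from the same table.
enum Generator: CaseIterable {
    case canvasAlignment, canvasPathCompleted, impact, selection, notification
}

// Where each generator played feedback (a ✅ in the table).
let observedFeedback: [Generator: Set<Surface>] = [
    .canvasAlignment: [.pencil, .trackpadDrag, .trackpadScroll],
    .canvasPathCompleted: [.pencil, .trackpadScroll],
    .impact: [.iPhone, .trackpadScroll],
    .selection: [.iPhone, .trackpadDrag, .trackpadScroll],
    .notification: [.iPhone],
]

/// Returns the generators that played feedback on every surface in `required`.
func generators(covering required: Set<Surface>) -> [Generator] {
    Generator.allCases.filter { required.isSubset(of: observedFeedback[$0, default: []]) }
}

// No single generator covers every tested surface...
print(generators(covering: Set(Surface.allCases)).isEmpty)  // true
// ...nor the three surfaces we assume drag-to-resize needs (iPhone touch, Pencil, trackpad drag).
print(generators(covering: [.iPhone, .pencil, .trackpadDrag]).isEmpty)  // true
```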

This split creates a frustrating gap: You can’t rely on a single API to deliver haptics across devices. And Apple’s documentation is maddeningly vague about when feedback will actually fire. I can’t say our QA team will be too pleased if the answer to “what’s the expected behaviour?” is “just trust Apple”.

It seems like we’re not supposed to have haptic feedback on iPhones when snapping while resizing shapes. However, our own judgment says that this feedback is nice, and it would be a regression to take away this feature that users have had for years on their iPhones. We decided we wanted feedback across both iPhones and iPads.

Solution: Device checks (sorry, Apple)

Since Apple’s advice to “trust the system” doesn’t hold up, we took matters into our own hands and used device checks to choose UICanvasFeedbackGenerator on iPads and UIImpactFeedbackGenerator on other platforms (although the latter is only known to do anything on iPhones).

We created a protocol to abstract the API:

```swift
@MainActor private protocol CanvasFeedbackGenerating {
    func prepare()
    func alignmentOccurred(at location: CGPoint)
}
```

This protocol matches the API of UICanvasFeedbackGenerator, so we can conform to this protocol without additional implementations:

```swift
extension UICanvasFeedbackGenerator: CanvasFeedbackGenerating { }
```

The prepare() method already matches UIImpactFeedbackGenerator, so our protocol conformance just needs to add the other method:

```swift
extension UIImpactFeedbackGenerator: CanvasFeedbackGenerating {
    // Forward canvas-style alignment feedback to an impact at the same location.
    func alignmentOccurred(at location: CGPoint) {
        impactOccurred(at: location)
    }
}
```

We use a wrapper type, CanvasFeedbackGenerator, which has the same API as UICanvasFeedbackGenerator but internally handles the device checks and forwards to a private object conforming to CanvasFeedbackGenerating:

```swift
@MainActor final class CanvasFeedbackGenerator {
    private let implementation: any CanvasFeedbackGenerating

    init(view: UIView) {
        switch UIDevice.current.userInterfaceIdiom {
        case .pad:
            implementation = UICanvasFeedbackGenerator(view: view)
        case .unspecified, .phone, .tv, .carPlay, .mac, .vision:
            fallthrough
        @unknown default:
            implementation = UIImpactFeedbackGenerator(style: .light, view: view)
        }
    }

    func prepare() {
        implementation.prepare()
    }

    func alignmentOccurred(at location: CGPoint) {
        implementation.alignmentOccurred(at: location)
    }
}
```

The code above is simplified to assume it compiles only for iOS and runs on iOS 17.5 or later.
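The real generators can only be exercised on a device, but the adapter-and-wrapper shape can be sketched (and unit-tested) without UIKit. Everything here is a hypothetical stand-in: Point replaces CGPoint, FakeCanvasGenerator and FakeImpactGenerator record calls in place of UICanvasFeedbackGenerator and UIImpactFeedbackGenerator, and an isPad flag replaces the UIDevice idiom check.

```swift
// Hypothetical stand-in for CGPoint, so this sketch compiles without UIKit.
struct Point {
    var x: Double
    var y: Double
}

// Same shape as the CanvasFeedbackGenerating protocol above, using Point.
protocol CanvasFeedbackGenerating {
    func prepare()
    func alignmentOccurred(at location: Point)
}

// Stand-in for UICanvasFeedbackGenerator: records calls instead of playing haptics.
final class FakeCanvasGenerator {
    private(set) var log: [String] = []
    func prepare() { log.append("canvas.prepare") }
    func alignmentOccurred(at location: Point) { log.append("canvas.alignment \(location.x),\(location.y)") }
}

// Stand-in for UIImpactFeedbackGenerator.
final class FakeImpactGenerator {
    private(set) var log: [String] = []
    func prepare() { log.append("impact.prepare") }
    func impactOccurred(at location: Point) { log.append("impact \(location.x),\(location.y)") }
}

// Method signatures already match, so the conformance is empty.
extension FakeCanvasGenerator: CanvasFeedbackGenerating {}

// The adapter: alignment feedback is forwarded as an impact.
extension FakeImpactGenerator: CanvasFeedbackGenerating {
    func alignmentOccurred(at location: Point) { impactOccurred(at: location) }
}

// The wrapper picks one backend up front, then forwards every call to it.
final class CrossDeviceFeedback {
    private let implementation: any CanvasFeedbackGenerating

    init(isPad: Bool, canvas: FakeCanvasGenerator, impact: FakeImpactGenerator) {
        if isPad {
            implementation = canvas
        } else {
            implementation = impact
        }
    }

    func prepare() { implementation.prepare() }
    func alignmentOccurred(at location: Point) { implementation.alignmentOccurred(at: location) }
}
```

Because the backend is chosen once in init, call sites only ever see one API, and a test can assert which fake received the calls.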

In practice, our SDK compiles for iOS, Mac Catalyst, and visionOS. UIFeedbackGenerator and all its subclasses are marked unavailable on visionOS and tvOS. (Given these APIs don’t always do something when they are available, it’s inconvenient that we also have to deal with API unavailability on some platforms; it would’ve been nice if these APIs had been made fully available but did nothing on some platforms.) We’ll make our wrapper handle this detail internally using #if os() compile-time checks.

Additionally, we support back to iOS 16, so we need #available runtime checks because some APIs are only available starting with iOS 17.5.
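The runtime part of that decision can also be pulled out into a pure function, giving QA an explicit answer to “which generator should fire here?”. This is a hypothetical sketch: Idiom is a reduced mirror of UIUserInterfaceIdiom, Backend only names which UIKit initializer would be used, and OSVersion stands in for the #available check, which can’t be parameterized like this in real code.

```swift
// Hypothetical, reduced mirror of UIUserInterfaceIdiom.
enum Idiom { case phone, pad, mac, other }

// Names the UIKit generator the wrapper would create.
enum Backend: Equatable {
    case canvas          // UICanvasFeedbackGenerator(view:)
    case impactWithView  // UIImpactFeedbackGenerator(style: .light, view:)
    case impact          // UIImpactFeedbackGenerator(style: .light)
}

// Hypothetical stand-in for the OS version that #available checks at runtime.
struct OSVersion: Comparable {
    let major: Int
    let minor: Int
    static func < (lhs: OSVersion, rhs: OSVersion) -> Bool {
        (lhs.major, lhs.minor) < (rhs.major, rhs.minor)
    }
}

let viewBasedGeneratorsMinimum = OSVersion(major: 17, minor: 5)

func backend(for idiom: Idiom, osVersion: OSVersion) -> Backend {
    // Before iOS 17.5, neither UICanvasFeedbackGenerator nor the view-based initializers exist.
    guard osVersion >= viewBasedGeneratorsMinimum else { return .impact }
    // Only iPad uses the canvas generator; everything else falls back to an impact.
    return idiom == .pad ? .canvas : .impactWithView
}
```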

The full code for our cross-device canvas feedback generator is below. This should work back to iOS 10 and compile for iOS, Mac Catalyst, visionOS, and tvOS:

```swift
import UIKit

@MainActor final class CanvasFeedbackGenerator {
    private let implementation: (any CanvasFeedbackGenerating)?

    init(view: UIView) {
#if os(iOS)
        // The only known cases that generate feedback are `UICanvasFeedbackGenerator` on iPad and `UIImpactFeedbackGenerator` on iPhone.
        // Neither of these seem to do anything on Mac (checked with macOS 15.1.1).
        if #available(iOS 17.5, *) {
            switch UIDevice.current.userInterfaceIdiom {
            case .pad:
                implementation = UICanvasFeedbackGenerator(view: view)
            case .unspecified, .phone, .tv, .carPlay, .mac, .vision:
                fallthrough
            @unknown default:
                implementation = UIImpactFeedbackGenerator(style: .light, view: view)
            }
        } else {
            implementation = UIImpactFeedbackGenerator(style: .light)
        }
#else
        // UIFeedbackGenerator and its subclasses are unavailable on visionOS and tvOS.
        implementation = nil
#endif
    }

    func prepare() {
        implementation?.prepare()
    }

    func alignmentOccurred(at location: CGPoint) {
        implementation?.alignmentOccurred(at: location)
    }
}

@MainActor private protocol CanvasFeedbackGenerating {
    func prepare()
    func alignmentOccurred(at location: CGPoint)
}

#if os(iOS)
@available(iOS 17.5, *)
extension UICanvasFeedbackGenerator: CanvasFeedbackGenerating { }

extension UIImpactFeedbackGenerator: CanvasFeedbackGenerating {
    func alignmentOccurred(at location: CGPoint) {
        if #available(iOS 17.5, *) {
            impactOccurred(at: location)
        } else {
            impactOccurred()
        }
    }
}
#endif
```

Closing thoughts

With that, we have haptic feedback when snapping occurs while resizing annotations, both on iPhones and on iPads when using the Apple Pencil Pro or the trackpad on the Magic Keyboard for iPad Pro (M4). We shipped this in Nutrient iOS SDK 14.4 and PDF Viewer 2025.1.

As Apple’s frameworks expand across platforms, we’re left to fill in the gaps where consistency falls short. Adding device-specific checks isn’t ideal, but it seems pragmatic and gives users the best tactile experience, whether they’re on an iPhone or iPad.

Encouraging developers to trust the system is a nice idea, but professional engineering should be based on clear documentation rather than vague trust, especially for QA. It would be easy to miss a bug in your own code if you trusted that haptic feedback might play on another device, just not the one you’re using for testing.