Automating Test Retries

To evolve and grow a complex codebase with confidence, having a large test suite is important. When coupled with an automated CI system that runs the test suite after every code change, it lets developers iterate swiftly without having to worry about regressions.

But having a large test suite comes with its own challenges, such as:

- Maintaining reliability of old tests as the codebase changes

- Dealing with flaky tests

- Reproducing “But it works on my machine!” failures that only show up on CI

- Making sure that tests complete in a reasonable amount of time

We’ve talked about the testing and CI processes and infrastructure at PSPDFKit in several of our earlier blog posts. In this one, we’ll share a story of how our iOS team dealt with flaky tests by rerunning them automatically.

Our Setup

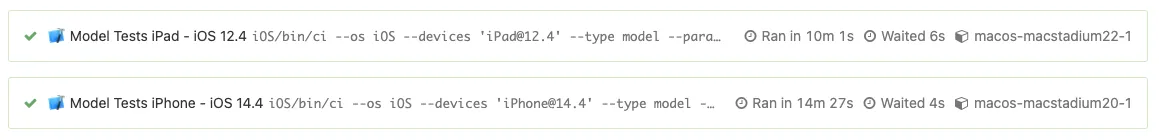

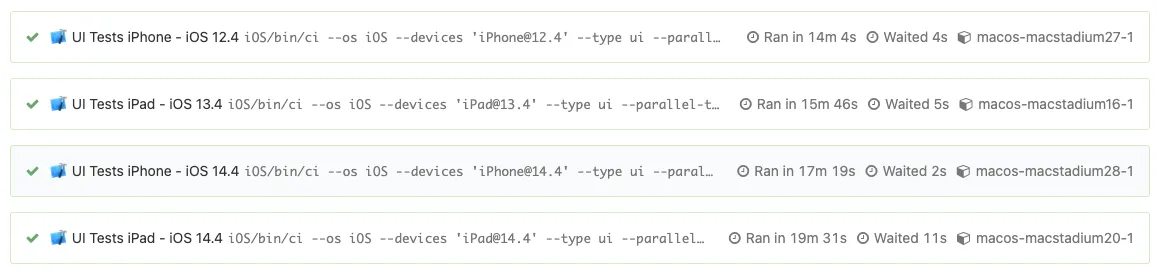

All of our tests are separated into two categories, and they run automatically on our CI system (Buildkite) after a PR is opened, across multiple iOS version and device combinations. These two types of tests are:

- Unit tests — These are usually small and isolated tests to verify models, parsers, or other code that transforms data. Due to their isolated nature and simplicity, these tests are quite reliable and fast.

- UI tests — UI tests are helpful for validating UI flows and are much closer to what would happen in real life as a user interacts with our products. These tests cover a lot of code, but they’re slow, fragile, and prone to flakiness.

The Problem

In the past, we had a lot of problems with flaky tests on CI, especially UI tests, which would randomly fail on seemingly unrelated code changes. There were several underlying causes for this flakiness, including:

- Unreliable system interactions

- Complicated UI interactions and timing issues

- Threading issues

- Dependence on test ordering

- Tests depending on or polluting global state

- Host machine performance characteristics and current load

Trying to pin down the actual reason for these failures proved to be both difficult and time-consuming, as they weren’t easy to reproduce locally with the debugger attached. The xcresult bundle artifact and logs from CI did shed some light on possible reasons for failure; however, it wasn’t clear what would fix the flakiness.

Before any PR can be merged to the master branch, we have a prerequisite that the test suite must be green. ✅ But sometimes, due to flakiness, a test suite would fail, requiring developers to manually retry the CI pipeline in the hope that the next run would pass. These retries took around 30 minutes each to finish — it was a source of frustration for developers, and it delayed the PR review cycle.

What We Tried...

To combat flaky tests, the first thing you need to do is to track them. So, we did that: For a few weeks, any test that randomly failed was temporarily disabled and added to a tracking list. We did this to unblock developers and bring PR review times back to normal.

Once we had a list of the flaky tests, we tried to go through each one and determine why it was failing. We found that some UI elements such as menus and popovers were particularly prone to flakiness: they would sometimes be dismissed by the system for no discernible reason! To fix this, we added some EarlGrey helper methods, which would retry the specific UI interaction multiple times before considering it failed. These helpers were rather effective at fixing this particular class of flakiness. However, there were other types of flakiness related to threading issues and test ordering that turned out to be more difficult to fix.

The final result of our efforts thus far was pretty meh. 😒 Some tests got fixed, but not all of them — so our initial problem remained. So instead of spending more time going down the flakiness rabbit hole, we decided to try something else: Accepting test flakiness as a part of life and RETRYING AUTOMATICALLY!

Automatic Test Retries

For parsing and reporting test results, our CI uses trainer to convert Xcode test runs to JUnit. We then use the generated JUnit file to report test failures to Buildkite using the annotate command.
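As a rough illustration of that reporting step, the following shell sketch pulls the failed test cases out of a JUnit file and pipes them to buildkite-agent annotate. It is a minimal sketch rather than the exact script running on CI: the function names and the failed-tests context are hypothetical, and it assumes the report puts each XML tag on its own line and marks failures with a failure element inside a testcase element that carries classname and name attributes. Check those assumptions against the file trainer produces for your project.

```shell
#!/usr/bin/env bash
# Sketch only: assumes one XML tag per line and
# <testcase classname="..." name="..."> elements with a <failure> child.

# Print "classname/name" for every test case that contains a failure.
failed_tests() {
  awk '
    /<testcase / {
      # Remember the attributes of the most recent test case.
      classname = ""; name = ""
      if (match($0, /classname="[^"]*"/)) classname = substr($0, RSTART + 11, RLENGTH - 12)
      if (match($0, / name="[^"]*"/))     name = substr($0, RSTART + 7, RLENGTH - 8)
    }
    /<failure/ { print classname "/" name }
  ' "$1" | sort -u
}

# Post the failures to Buildkite as a single error annotation.
# buildkite-agent reads the annotation body from standard input.
annotate_failures() {
  local failures
  failures="$(failed_tests "$1")"
  if [ -n "$failures" ]; then
    printf 'Failed tests:\n%s\n' "$failures" |
      buildkite-agent annotate --style "error" --context "failed-tests"
  fi
}
```

On a CI agent, calling annotate_failures with the path to the generated report would post one annotation listing every failed test. How the classname value maps to the Target/Class/method form that xcodebuild expects depends on the report, so that conversion is left out here.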

For an initial proof of concept, we updated the CI pipeline so that it’d rerun the entire test suite in the case of a test failure. This meant that developers no longer needed to manually rerun the pipeline when these failures occurred. However, running the entire test suite again was expensive in terms of time, and it would overload the CI if there were a lot of pending PRs.

Since we already had the JUnit parsing code, we decided to build on top of that and rerun only the failed tests. By using the xcodebuild command’s -only-testing flag, we ran only the failed tests again. Another optimization we made was to build the project only once, even when testing multiple times. We accomplished that by using the xcodebuild build-for-testing and xcodebuild test-without-building commands. Here’s how we build for testing:

xcodebuild -workspace "PSPDFKit.xcworkspace" -scheme PSPDFKitUI-ConsolidatedTests-iOS -configuration Testing -sdk iphonesimulator -derivedDataPath "PSPDFKit/iOS/build-tests" -parallelizeTargets build-for-testing

And here’s how we test it:

xcodebuild -workspace "PSPDFKit.xcworkspace" -scheme PSPDFKitUI-ConsolidatedTests-iOS -configuration Testing -destination "platform=iOS Simulator,id=ABC" -sdk iphonesimulator -derivedDataPath "PSPDFKit/iOS/build-tests" -parallelizeTargets -parallel-testing-enabled YES -parallel-testing-worker-count 3 test-without-building

For retrying only one specific test, the command looks something like this:

xcodebuild -workspace "PSPDFKit.xcworkspace" -scheme PSPDFKitUI-ConsolidatedTests-iOS -configuration Testing -destination "platform=iOS Simulator,id=ABC" -only-testing:PSPDFKitUI-EarlGreyTests/AnnotationStylesUITests/testSquareAnnotationBorderStyles -sdk iphonesimulator -derivedDataPath "PSPDFKit/PSPDFKit/iOS/build-tests" -parallelizeTargets -parallel-testing-enabled YES -parallel-testing-worker-count 3 test-without-building

Results

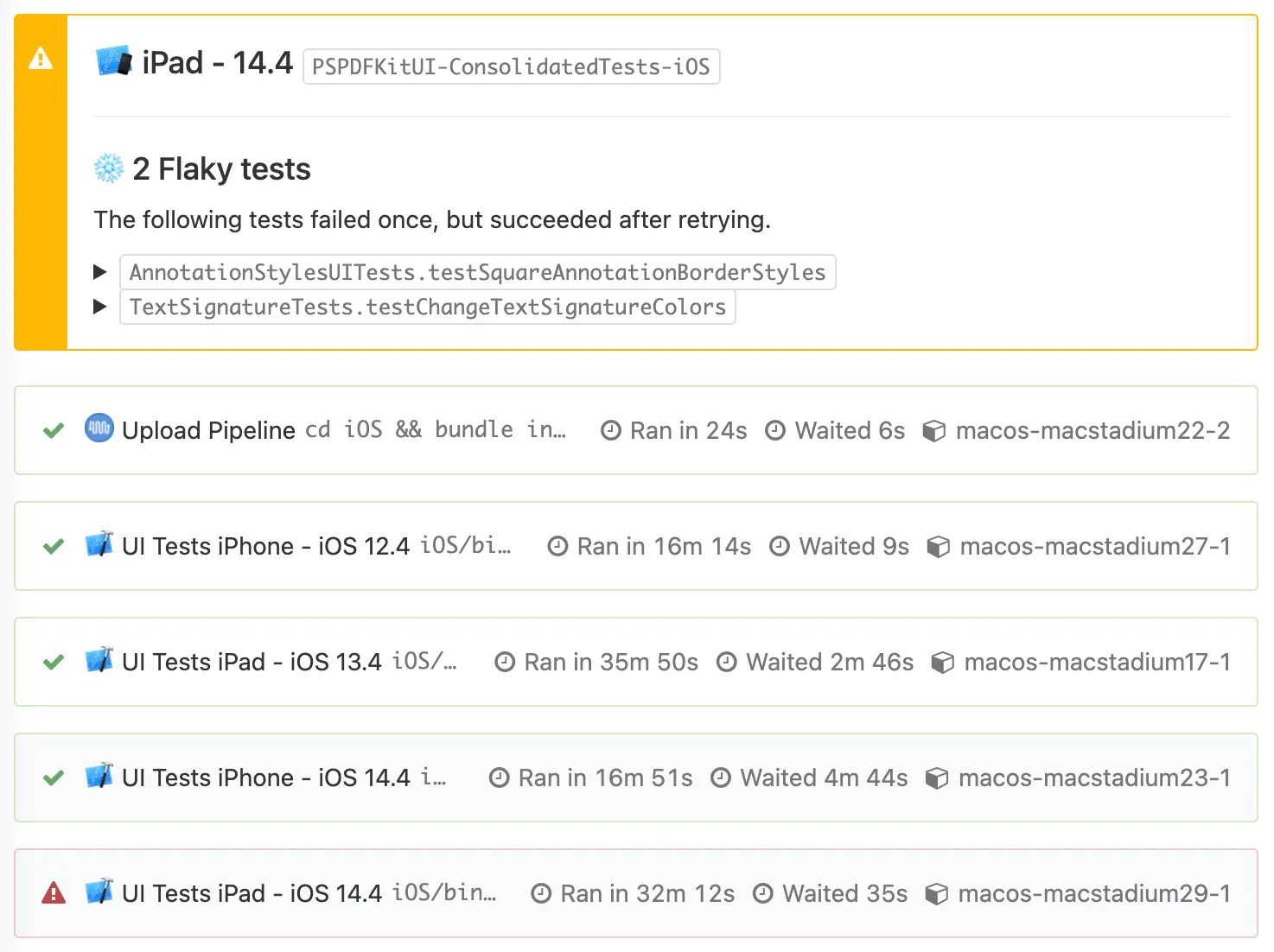

The results of automatic test retries were promising. Flaky tests still exist, but they no longer slow down the workflow of our developers. CI automatically retries any failing tests, and almost all flaky tests pass when run again. Only if a test fails three times in a row is it considered an actual failure and the build marked as failed.
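The policy described above (rerun only what failed, and give up after three attempts) can be sketched in a few lines of shell. This is an illustration under assumptions, not the actual pipeline script: retry_failed_tests, only_testing_flags, and MAX_ATTEMPTS are hypothetical names, and the runner passed in is assumed to be a command that runs the given test identifiers (or the whole suite when given none) and prints the ones that failed, one per line. It also assumes identifiers contain no spaces.

```shell
#!/usr/bin/env bash
# Sketch of "rerun only the failures, up to three attempts".
# All names here are hypothetical, not taken from the real CI setup.

MAX_ATTEMPTS="${MAX_ATTEMPTS:-3}"

# Turn test identifiers into xcodebuild -only-testing flags, one per line.
only_testing_flags() {
  for id in "$@"; do
    printf '%s\n' "-only-testing:$id"
  done
}

# Usage: retry_failed_tests RUNNER
# RUNNER receives the identifiers to run (none means the whole suite)
# and must print the identifiers that failed, one per line.
retry_failed_tests() {
  local runner="$1" attempt=1 failed first_failures
  failed="$("$runner")"            # attempt 1: the whole suite
  first_failures="$failed"
  while [ -n "$failed" ] && [ "$attempt" -lt "$MAX_ATTEMPTS" ]; do
    attempt=$((attempt + 1))
    echo "Attempt $attempt: rerunning only the failed tests" >&2
    # Unquoted on purpose: each identifier becomes its own argument.
    failed="$("$runner" $failed)"
  done
  if [ -n "$failed" ]; then
    printf 'Failed %s times in a row:\n%s\n' "$MAX_ATTEMPTS" "$failed"
    return 1
  fi
  if [ -n "$first_failures" ]; then
    # These failed at first but passed on a later attempt.
    printf 'Flaky (passed on retry):\n%s\n' "$first_failures"
  fi
}
```

A real runner would wrap the test-without-building command shown earlier, add the output of only_testing_flags when identifiers are given, and read the failures back from the JUnit report. Because each retry receives only the previous attempt's failures, anything still failing at the end has failed on every attempt.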

We also report any flaky tests to CI so that they’re brought to our attention. The report looks something like this:

ℹ️ Note: It’s important to point out that this approach of retrying tests might hide actual issues in our codebase, such as race conditions in the SDK. Our plan to combat this is to gather statistics about retried tests and periodically investigate the most problematic ones. Right now, we feel that this is a tradeoff worth making to increase development productivity.
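One lightweight way to gather such statistics would be to append every retried test to a log and rank the entries by frequency. The sketch below assumes a plain text log with one test identifier per line, written once per retry. Both the log format and the rank_flaky_tests name are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: rank retried tests by how often they needed a retry.
# Input: a log file with one test identifier per line (one line per retry).
# Output: "count<TAB>identifier", most frequently retried first.
rank_flaky_tests() {
  sort "$1" | uniq -c | sort -rn | awk '{ print $1 "\t" $2 }'
}
```

The tests at the top of that list would be the first candidates for a closer look.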

Retrying Tests with Xcode 13

At WWDC 21, with Xcode 13, Apple introduced test repetitions, which allow developers to define a number of test runs and a stopping condition. So, for example, we could decide to repeat a test 100 times but stop it if the test passes. Here’s what Apple has to say about it in the release notes:

When using xcodebuild, pass -test-iterations with a number to run a test a fixed number of times, or combine it with -retry-tests-on-failure or -run-tests-until-failure to use one of the other stopping conditions. For example, to run your test with repetition from the command line, start with the base xcodebuild command to run your test, and add the flags -test-iterations set to 100 and -run-tests-until-failure:

xcodebuild test -project MyProject.xcodeproj -scheme MyProject -test-iterations 100 -retry-tests-on-failure

This would’ve been super helpful to us if only it had been released a few months earlier. 🥲 To dig into this deeper, check out this fantastic WWDC video on how to diagnose unreliable code with test repetitions.
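For comparison, a three-attempt policy like the one described earlier could probably be expressed with these flags alone. When -test-iterations is combined with -retry-tests-on-failure, the number acts as the maximum number of runs per test, so a value of 3 means one initial run plus up to two retries. The helper below only prints the extra arguments; retry_flags is a hypothetical name, and this is an untested sketch rather than a setup described in this post.

```shell
#!/usr/bin/env bash
# Sketch: print the Xcode 13 flags for "retry a failing test,
# at most MAX_RUNS runs in total". retry_flags is a hypothetical helper.
retry_flags() {
  local max_runs="$1"
  printf '%s\n' -test-iterations "$max_runs" -retry-tests-on-failure
}
```

The output of retry_flags 3 could then be appended to a test-without-building invocation in place of a hand-written retry loop.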

Conclusion

Tracking down flaky tests is a challenging task. Sometimes, you can get stuck in a brute-force approach of trying to fix them, but a simple solution like automating test retries can work wonders. It’s important to maintain a reliable test suite, as otherwise it creates more problems than it solves. I hope that our story of implementing automatic test retries helps guide you on your journey toward more stable tests! Good luck! 🍀