Embedding Audio in PDFs: Sound Annotations in Depth

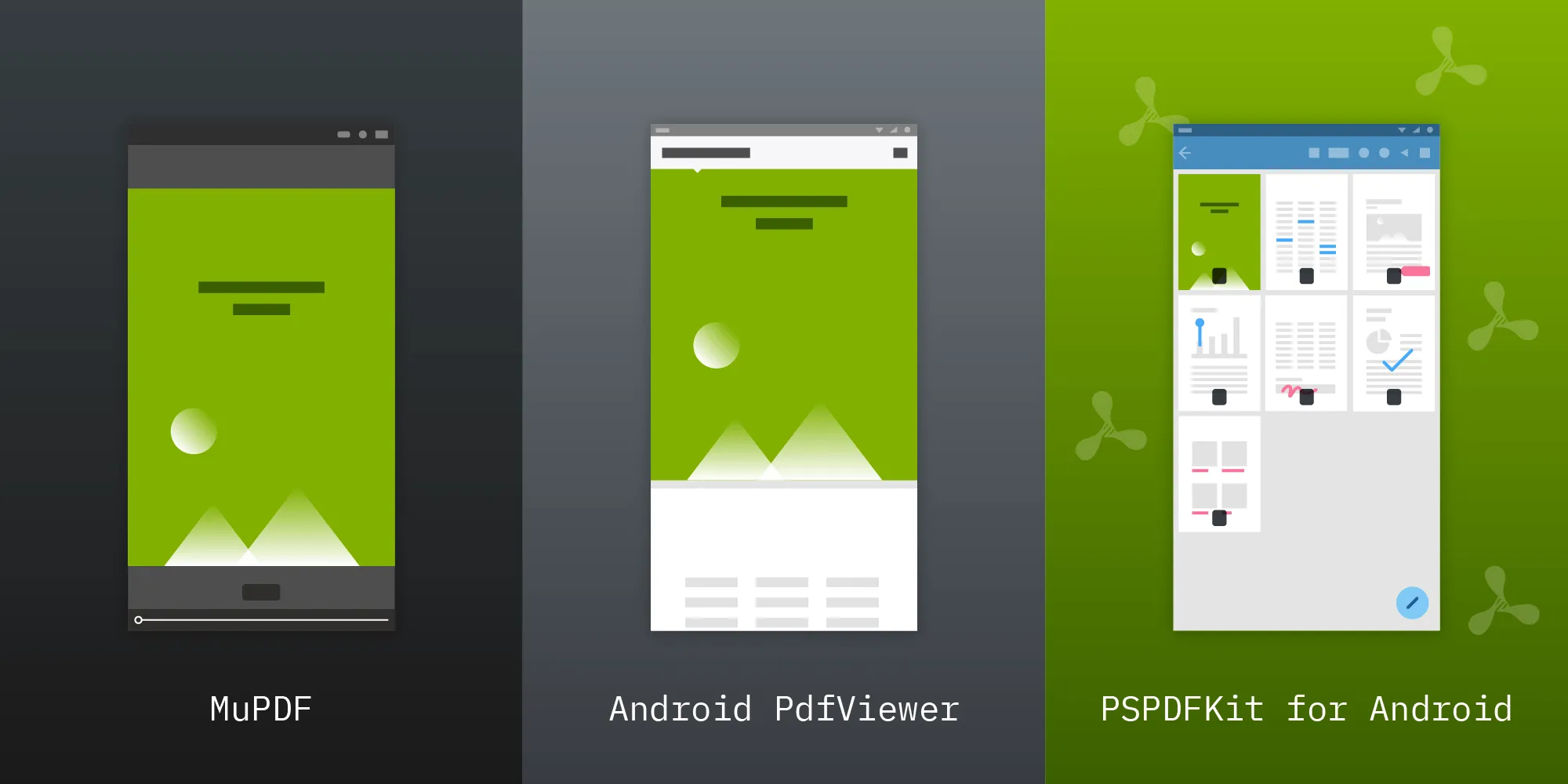

With sound annotations, it’s possible to add audio files directly to a document so that they’re stored inside a PDF file. PSPDFKit for Android and PSPDFKit for iOS have full support for playback and recording of sound annotations. This post explains what sound annotations are and how they’re represented inside PDF files. It continues by outlining how PSPDFKit for Android works with sound annotations.

A sound annotation is similar to a standard text note annotation, with the difference that, instead of text contents, it contains sound recorded from a device’s microphone or imported from an audio file. The sound can be played in PDF viewers after selecting the sound annotation.

Sound annotations are displayed as icons on a page, just like note annotations. However, a sound annotation icon is usually a speaker (default) or microphone — see the image below — to indicate that the annotation contains an audio note.

Storing Audio in PDF

Sounds we hear are caused by the propagation of acoustic waves in air. These waves can be represented by graphing the variations of air pressure over time as a waveform. Storing this waveform in digital media is done by sampling the waveform value periodically. Each audio sample is an integer value that is read directly from a physical sensor, usually a microphone. This method of converting an analog signal (i.e. sound) to a digital format is referred to as pulse-code modulation (PCM).

A PCM audio stream has two basic characteristics:

- The sampling rate is the number of samples stored per second. A value of 44.1 kHz is typical for music (it’s the sampling rate used by audio CDs). A sampling rate in the range of 8–22 kHz is considered acceptable for speech recording.

- The bit depth is the number of bits used to represent each sample value. 16 bits is the most widely used bit depth.

Audio data is encapsulated as a sound object attached to a sound annotation inside a PDF file. A sound object encapsulates an audio stream itself, along with all the metadata that specifies its format and characteristics. Examples of this metadata include the sampling rate, bit depth, number of channels (one for mono or two for stereo), and sample encoding.
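
To get a feel for how these characteristics combine, here’s a minimal sketch that estimates the size of an uncompressed PCM stream. The function name `pcmByteCount` is made up for this illustration and isn’t part of any PSPDFKit or Android API.

```kotlin
/**
 * Size in bytes of an uncompressed PCM stream.
 * Illustrative helper only; not part of PSPDFKit.
 */
fun pcmByteCount(sampleRate: Int, bitDepth: Int, channels: Int, seconds: Int): Long {
    require(bitDepth % 8 == 0) { "This sketch assumes whole-byte samples" }
    // One frame holds one sample for every channel.
    val bytesPerFrame = channels * (bitDepth / 8)
    return sampleRate.toLong() * bytesPerFrame * seconds
}
```

For one minute of audio, `pcmByteCount(44_100, 16, 2, 60)` gives 10,584,000 bytes for CD-quality stereo, while `pcmByteCount(8_000, 16, 1, 60)` gives 960,000 bytes for mono speech, which is one reason lower sampling rates are attractive for voice notes.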

Audio samples can be encoded in various formats. For example, these sample encodings are defined in the PDF specification:

- Raw: Unsigned values in a range of 0 to 2<sup>B</sup>-1, where B is the bit depth.

- Signed: Two’s complement signed values in a range of -(2<sup>B-1</sup>) to 2<sup>B-1</sup>-1, where B is the bit depth.

- mu-Law or A-Law: μ-law or A-law encoded samples that were traditionally used in telephony applications.
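
The ranges of the first two encodings can be written out in a few lines of code. This is only a sketch: the function names are hypothetical, and `rawToSigned` assumes the common convention that the midpoint of the unsigned range represents silence, which is an assumption on my part rather than something stated above.

```kotlin
/** Value range of the "Raw" (unsigned) encoding for the given bit depth. */
fun rawRange(bitDepth: Int): LongRange {
    require(bitDepth in 1..32) { "Unsupported bit depth: $bitDepth" }
    val max = (1L shl bitDepth) - 1
    return 0L..max
}

/** Value range of the "Signed" (two's complement) encoding for the given bit depth. */
fun signedRange(bitDepth: Int): LongRange {
    require(bitDepth in 1..32) { "Unsupported bit depth: $bitDepth" }
    val half = 1L shl (bitDepth - 1)
    return -half..(half - 1)
}

/** Re-centers a Raw sample around zero (assumes the midpoint means silence). */
fun rawToSigned(sample: Long, bitDepth: Int): Long {
    require(sample in rawRange(bitDepth)) { "Sample out of range for $bitDepth bits" }
    return sample - (1L shl (bitDepth - 1))
}
```

With 8 bits, `rawRange(8)` is `0..255` and `signedRange(8)` is `-128..127`; with 16 bits, the signed range is `-32768..32767`.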

Audio samples are expected to be stored in a big-endian format. In addition, multiple channels are expected to be stored in an interleaved format, i.e. when using stereo recording, each sample value for the left channel should be stored together with the corresponding sample for the right channel.

These rather strict requirements don’t map directly to the format used by Android’s media APIs. But don’t worry; in the next section, I’ll present a high-level API that simplifies conversion to this PDF audio format when using PSPDFKit.
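
To make the big-endian, interleaved layout concrete, here’s a minimal sketch that packs two channels of 16-bit samples into the byte order described above. The function name `interleaveStereoBigEndian` is hypothetical; it only illustrates the layout and isn’t how PSPDFKit performs the conversion.

```kotlin
import java.nio.ByteBuffer
import java.nio.ByteOrder

/**
 * Packs 16-bit stereo samples as left/right pairs in big-endian byte order.
 * Illustrative helper only; not part of PSPDFKit.
 */
fun interleaveStereoBigEndian(left: ShortArray, right: ShortArray): ByteArray {
    require(left.size == right.size) { "Both channels need the same number of samples" }
    val buffer = ByteBuffer
        .allocate(left.size * 2 * Short.SIZE_BYTES)
        .order(ByteOrder.BIG_ENDIAN)
    for (i in left.indices) {
        // Each left sample is immediately followed by its right counterpart.
        buffer.putShort(left[i])
        buffer.putShort(right[i])
    }
    return buffer.array()
}
```

A left sample of `0x0102` paired with a right sample of `0x0304` ends up as the bytes `01 02 03 04`: the most significant byte of each sample comes first, and the channels alternate.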

PSPDFKit API

PSPDFKit for Android supports both the playback of existing sound annotations and the recording of new annotations using a device’s microphone. This includes full UI support that’s shipped as part of the PdfActivity.

Sound annotations on a page are represented by the SoundAnnotation class. Its model exposes the sound data stored inside a PDF and allows creation of new sound annotations programmatically by importing an existing audio file.

To create a new sound annotation, the SoundAnnotation constructor expects an EmbeddedAudioSource with raw audio data in the PDF format. As indicated above, we don’t want to force users of our API to handle this conversion themselves. This is why we provide the AudioExtractor API, which extracts audio tracks from media files and automatically converts them to the required format:

```kotlin
// The audio decoder supports decoding audio tracks from all media formats supported by `MediaExtractor`.
// In this example, we're extracting a media file from an application's assets.
val audioExtractor = AudioExtractor(context, Uri.parse("file:///android_asset/media-file.mp3"))

// If there's more than one audio track, you should use this
// call to select which track to extract.
// The first audio track is selected by default.
// audioExtractor.selectAudioTrack(0)

// Extract the audio track asynchronously.
audioExtractor.extractAudioTrackAsync().subscribe { embeddedAudioSource ->
    // Create a new sound annotation from the extracted audio data.
    val soundAnnotation = SoundAnnotation(
        // Page index 0.
        0,
        // Page rectangle (in PDF coordinates).
        RectF(...),
        // Embedded audio source returned by the audio extractor.
        embeddedAudioSource
    )

    // Add the created annotation to the page.
    document.getAnnotationProvider().addAnnotationToPage(soundAnnotation)
}
```

AudioExtractor uses MediaExtractor from the Android SDK. This means it supports all media formats that are supported by the actual device. Widely used audio formats such as MP3 or AAC are usually supported. It’s also possible to extract audio tracks from supported video formats.

The Android audio subsystem works with audio samples in the “Signed” encoding, which is also the encoding most widely used by third-party PDF tools when embedding sound annotations. PSPDFKit for Android supports playback of this encoding too. The supported sample rates and bit depths depend on the target device. Android guarantees that a 16-bit depth is supported on all devices (see AudioFormat#ENCODING_PCM_16BIT). This is why we decided to use 16 bits for sound recording and audio extraction via AudioExtractor: for maximum compatibility with the Android multimedia framework.

Audio Playback

We now know how sound annotations are stored inside PDF files, how PSPDFKit’s public API models these annotations, and how the AudioExtractor API simplifies creation of sound annotations from arbitrary media files. It’s time to dig a bit deeper and explain how PSPDFKit for Android plays back sound annotations.

The Android SDK ships with high-level MediaPlayer APIs that provide a convenient way of playing audio or video files. This poses an initial challenge for us: Sound annotation audio data isn’t in a compatible format. We solved this problem by introducing the intermediate step of converting raw audio data to the WAVE file format.

Converting an Audio Stream to the WAVE File Format
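
The heart of this conversion is a byte-order change: PDF stores multi-byte samples big-endian, while WAVE expects them little-endian. As a standalone illustration of just that step, here’s a minimal sketch built on `java.nio.ByteBuffer`. The function name `swapByteOrder16` is hypothetical, and the sketch assumes 16-bit samples held entirely in memory.

```kotlin
import java.nio.ByteBuffer
import java.nio.ByteOrder

/**
 * Returns a copy of [pcm] with every 16-bit sample converted
 * from big-endian to little-endian byte order.
 * Illustrative helper only; assumes 16-bit samples.
 */
fun swapByteOrder16(pcm: ByteArray): ByteArray {
    require(pcm.size % 2 == 0) { "16-bit PCM data must have an even number of bytes" }
    val input = ByteBuffer.wrap(pcm).order(ByteOrder.BIG_ENDIAN)
    val output = ByteBuffer.allocate(pcm.size).order(ByteOrder.LITTLE_ENDIAN)
    while (input.hasRemaining()) {
        // Read one sample in the source order, write it in the target order.
        output.putShort(input.short)
    }
    return output.array()
}
```

Because the operation simply swaps the two bytes of each sample, applying it twice returns the original data.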

Let’s define a simple model for our WavWriter:

```kotlin
class WavWriter(
    /** Sound data in (linear) PCM. */
    private val audioData: ByteArray,
    /** Sample rate in samples per second. */
    private val sampleRate: Int,
    /** Bits per sample. */
    private val sampleSize: Int,
    /** Number of sound channels. */
    private val channels: Int,
    /** Byte order of samples inside `audioData`. Used only when `sampleSize` is larger than 8. */
    private val audioDataByteOrder: ByteOrder = ByteOrder.BIG_ENDIAN
) {

    ...
}
```

Here’s a method that produces a WAVE file header. This header lets players read all the metadata they need to play back the audio stream:

```kotlin
// Sizes implied by the fields written below: "RIFF" tag + file size field = 8 bytes;
// "WAVE" tag + "fmt " chunk (24 bytes) + data chunk header (8 bytes) = 36 bytes.
private const val RIFF_HEADER_SIZE = 8
private const val WAVE_HEADER_SIZE = 36

private fun getWaveHeader(): ByteBuffer {
    val header = ByteBuffer.allocate(RIFF_HEADER_SIZE + WAVE_HEADER_SIZE)
    header.order(ByteOrder.LITTLE_ENDIAN)

    // RIFF file header.
    header.put("RIFF".toByteArray())
    // Final file size except 8 bytes for RIFF header and this file size field.
    header.putInt(WAVE_HEADER_SIZE + audioData.size)

    // Wave format chunk.
    header.put("WAVE".toByteArray())
    header.put("fmt ".toByteArray())
    // Sub-chunk size, 16 for PCM.
    header.putInt(16)
    // AudioFormat, 1 for PCM.
    header.putShort(1.toShort())
    // Number of channels, 1 for mono, 2 for stereo etc.
    header.putShort(channels.toShort())
    // Sample rate.
    header.putInt(sampleRate)
    // Byte rate.
    header.putInt(sampleRate * sampleSize * channels / 8)
    // Block align (bytes per sample frame): `NumberOfChannels * BitsPerSample / 8`.
    header.putShort((channels * sampleSize / 8).toShort())
    // Bits per sample.
    header.putShort(sampleSize.toShort())

    // Data chunk.
    header.put("data".toByteArray())
    // Data chunk size.
    header.putInt(audioData.size)

    return header
}
```

We’ll use a single generic method for writing the audio data to an OutputStream. The most interesting part of its implementation is the conversion from big-endian audio data stored in the PDF file to little-endian audio data used by the WAVE format:

```kotlin
/** Size of the buffer used when writing the wav file. */
private const val BUFFER_SIZE = 2048

fun writeToStream(outputStream: OutputStream) {
    // Write the WAVE header.
    outputStream.write(getWaveHeader().array())

    // Write the audio data.
    if (sampleSize > 8 && audioDataByteOrder == ByteOrder.BIG_ENDIAN) {
        // Reverse byte order for big-endian audio clips with a sample size larger than 1 byte (WAVE files are little endian).
        // Swapping byte pairs like this is only correct for 16-bit samples.
        val buffer = ByteArray(BUFFER_SIZE)
        var bufferIndex = 0

        var audioDataIndex = 0
        while (audioDataIndex < audioData.size - 1) {
            if (bufferIndex == BUFFER_SIZE) {
                outputStream.write(buffer)
                bufferIndex = 0
            }

            val msb = audioData[audioDataIndex]
            val lsb = audioData[audioDataIndex + 1]
            audioDataIndex += 2

            buffer[bufferIndex] = lsb
            buffer[bufferIndex + 1] = msb
            bufferIndex += 2
        }
        if (bufferIndex != 0) {
            outputStream.write(buffer, 0, bufferIndex)
        }
    } else {
        outputStream.write(audioData)
    }
    outputStream.close()
}
```

We’re now able to easily produce WAVE files directly from sound annotations:

```kotlin
fun getWaveFileUri(soundAnnotation: SoundAnnotation): Uri {
    // Produce a unique name for a sound annotation in the app's cache directory.
    val cacheDirectory = context.getCacheDir()
    val outputFile = File(
        cacheDirectory,
        String.format("sound_%s.wav", soundAnnotation.uuid)
    )

    // Convert the sound annotation's data to our output file.
    BufferedOutputStream(FileOutputStream(outputFile)).use { outputStream ->
        WavWriter(
            soundAnnotation.audioData,
            soundAnnotation.sampleRate,
            soundAnnotation.sampleSize,
            soundAnnotation.channels
        ).writeToStream(outputStream)
    }

    return Uri.fromFile(outputFile)
}
```

Playing the Sound

We’ll conclude our efforts by playing back the produced WAVE file using Android’s MediaPlayer API.

To start, we need to initialize the media player with mediaUri, which is produced by our getWaveFileUri() method:

```kotlin
val mediaPlayer: MediaPlayer = MediaPlayer()

// Set source of the media player's data.
mediaPlayer.setDataSource(context, mediaUri)

// Set audio attributes to inform the media
// system about the type of our audio stream.
val audioAttributes = AudioAttributes.Builder()
    .setLegacyStreamType(AUDIO_STREAM_TYPE)
    .setUsage(AudioAttributes.USAGE_MEDIA)
    .build()
mediaPlayer.setAudioAttributes(audioAttributes)

// Prepare the media player.
mediaPlayer.prepare()
```

We can now use standard media playback operations on the mediaPlayer instance, for example, to start playback (mediaPlayer.start()), pause (mediaPlayer.pause()), or seek in the stream (mediaPlayer.seekTo(milliseconds)).

Note that this article covers only a small portion of PSPDFKit’s audio playback implementation. One of the more important issues we needed to take care of was managing audio focus. Multiple Android apps can play audio at the same time, and the system mixes these streams together, which is usually undesirable. Android’s media framework solves this by introducing the concept of audio focus. Each well-behaved app that wants to play sounds is expected to request audio focus before starting playback and to abandon it when pausing or stopping playback. It should also listen for audio focus changes and stop playback when another app requests focus. You can read more about this topic in the manage audio focus guide on the Android developers site.
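
As a rough illustration of the request and abandon cycle, the sketch below wraps a MediaPlayer in a small helper. `FocusAwarePlayback` is a hypothetical class written for this post, not PSPDFKit’s actual implementation, and it assumes API level 26 or higher because it relies on `AudioFocusRequest`.

```kotlin
import android.content.Context
import android.media.AudioAttributes
import android.media.AudioFocusRequest
import android.media.AudioManager
import android.media.MediaPlayer

/** Hypothetical helper: plays only while holding audio focus (API 26+). */
class FocusAwarePlayback(context: Context, private val mediaPlayer: MediaPlayer) {

    private val audioManager =
        context.getSystemService(Context.AUDIO_SERVICE) as AudioManager

    // Pause when another app takes the focus, permanently or temporarily.
    private val focusListener = AudioManager.OnAudioFocusChangeListener { change ->
        when (change) {
            AudioManager.AUDIOFOCUS_LOSS,
            AudioManager.AUDIOFOCUS_LOSS_TRANSIENT ->
                if (mediaPlayer.isPlaying) mediaPlayer.pause()
        }
    }

    private val focusRequest = AudioFocusRequest.Builder(AudioManager.AUDIOFOCUS_GAIN)
        .setAudioAttributes(
            AudioAttributes.Builder().setUsage(AudioAttributes.USAGE_MEDIA).build()
        )
        .setOnAudioFocusChangeListener(focusListener)
        .build()

    /** Requests focus and starts playback only if the request is granted. */
    fun start() {
        val result = audioManager.requestAudioFocus(focusRequest)
        if (result == AudioManager.AUDIOFOCUS_REQUEST_GRANTED) {
            mediaPlayer.start()
        }
    }

    /** Pauses playback and gives the focus back to the system. */
    fun pause() {
        if (mediaPlayer.isPlaying) mediaPlayer.pause()
        audioManager.abandonAudioFocusRequest(focusRequest)
    }
}
```

A production implementation would likely also handle ducking and resuming after a transient loss; this sketch leaves both out to keep the cycle easy to follow.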

Conclusion

Sound annotations aren’t the most widely used annotation type, but they can still prove useful in certain applications. The leading use case of sound annotations is multimedia PDFs — be it in the education sector or simply to improve the customer experience of digital magazines. In addition, there are cases where sound notes are a better fit for reviewing documents or taking notes, as opposed to other markup annotations such as highlights, shapes, or text notes.

This blog post outlined what sound annotations are and how PSPDFKit for Android works with audio data stored in PDF files. If you wish to learn more, refer to the relevant Android and iOS guides. You can also click here to try out our SDK.