Unleashing the Beta Beast: Introducing the Mind-Reading Q&A Tool

My previous blog post chronicled my vainglorious attempt to teach OpenAI about our SDK documentation. That part of the journey ended in a proof of concept (POC) that I presented internally to the company. Since then, I’ve been working relentlessly on improving it. After even more research, trial and error, sleepless nights, and broken dreams — all of which I document in this blog post — the beta version of the documentation Q&A tool is finally available to the general public. To check it out, head to the Ask AI page. And please let me know what you think about it!

Prompt:

You’ve improved the initial proof of concept and you’re about to release the beta version of the Q&A tool. This enables users to ask questions about the company’s products, and the tool uses OpenAI technology to formulate answers based on the SDK documentation.

Write a blog post introducing the beta tool with humor and sarcasm.

ChatGPT’s response:

Introduction

Greetings, fellow developers, tech enthusiasts, and accidental time travelers who stumbled upon this blog post! Today, I’m thrilled to present the beta version of our mind-reading, code-generating, and slightly rebellious Q&A tool. Brace yourselves for an exhilarating journey through the realms of our SDK documentation, where questions are answered, mysteries are unraveled, and weirdness is served on a silver platter.

The Birth of a Legend

Picture this: a technical writer armed with nothing but a keyboard, a caffeine addiction, and a profound desire to make SDK documentation less painful. Armed with OpenAI’s magical language processing capabilities, I set out on a quest to build a tool that would make our users’ lives easier. Little did I know, I was about to unleash a beta beast that would leave me equal parts impressed and terrified.

Question Everything (Except Our Answers)

You’re probably wondering, how does this mystical Q&A tool work? Well, it’s simple! You just throw questions at it, and it responds with answers that are eerily accurate... most of the time. Our OpenAI-powered contraption has devoured our SDK documentation, digested it (weird, right?), and now stands ready to dish out tailormade answers like a vending machine that dispenses both wisdom and witty comebacks.

Beta: It’s Like Tinder, but for Bugs

As with any beta release, I fully expect some delightful quirks and unexpected surprises. Bugs may appear like uninvited party crashers, but fear not! These glitches are merely misunderstood features waiting to be tamed. I kindly ask you, dear users, to embark on this beta journey with me, embrace the weirdness, and report any oddities you encounter. Together, we shall make this Q&A tool the hero it was destined to be!

Conclusion

In the spirit of adventure, I invite you to dive headfirst into the captivating world of our beta Q&A tool. Unearth answers to your burning questions, witness the occasional philosophical debate with AI, and above all, enjoy the journey. Remember, we’re here to transform SDK documentation from a dull chore into an exhilarating experience. Get ready to unlock a new level of productivity and humor!

P.S. Don’t worry, the Q&A tool won’t predict your future or spoil the next season of your favorite TV show. I’m still working on that upgrade!

Yes, when it comes to humor and creativity, I still can’t rival OpenAI. But that’s OK. I’m a tech writer, I remind myself. It’s my job to be boring and to the point. (Wiping away an invisible tear deep inside.)

So here’s a sterilized, pasteurized, no-fluff, and no-filter list of changes I’ve made to the proof of concept since the last blog post, all of which have enabled the publication of the beta today:

- As promised, I’ve improved the prompt generation mechanism. Previously, the retrieval plugin simply chopped up text based on the maximum number of tokens per chunk, so I tweaked this to be a little bit smarter. The current logic splits larger bodies of text into meaningful semantic chunks, such as page sections and code blocks. This improved the context provided to OpenAI, and thus the answers. Before the change, the OpenAI API was fed a hodgepodge diet of code snippet morsels jumbled with fragments of marketing text, which it understandably didn’t enjoy.

- Answers are now streamed from OpenAI in fragments, which gives a more ChatGPT-y experience. This is based on Lukas Wimhofer’s tutorial about building a Next.js streaming app. In contrast, the POC waited for a wall of text before displaying the entire answer to the user. OpenAI servers seemed to be increasingly in demand, which meant that the full answer sometimes took a minute to arrive. As we all know, “ain’t nobody got time for that.” So the streaming of answers allows you to read pieces of text as soon as they arrive from the big metallic brain. And you hopefully won’t run away from the Q&A tool after five seconds, leaving the valuable comment of “This $%£ no good” as feedback.

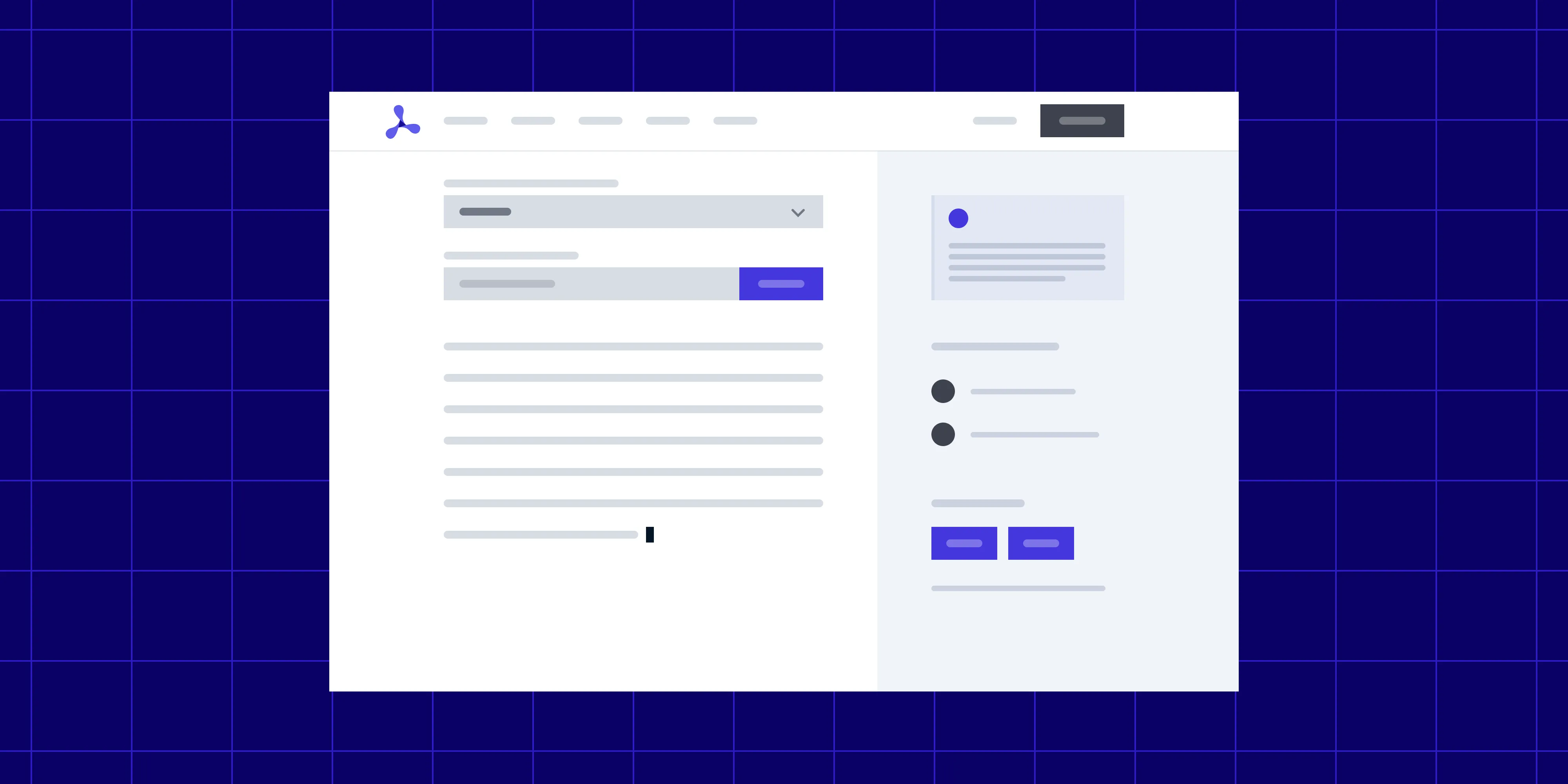

- Streaming answers posed technical difficulties, of course. The most notable was the requirement of an active client-server connection. Our website is static and unequipped for this challenge. The quick-and-dirty interim solution was to load the beta in an iframe where I could do whatever I wanted. Outside the iframe, however, everything became much more complicated to style. This is why you might end up with two scroll bars — one in the iframe and another in the main window. Yup, it’s not a design choice. Rather, it’s a clear limitation of the beta release and my frontend capabilities, both of which I’ll improve upon in the future.

- I’ve done my best to align the styling and the user experience of the tool with the rest of the website. Our wonderful Design team has been most helpful and patient with me during this project. Needless to say, the Design team’s concept was much more beautiful than anything I could hope to implement. As such, the responsibility for any remaining issues and annoyances is all mine.

- I’ve uploaded the entire documentation to the vector database using the retrieval plugin. The source code of our website uses a rather unique combination of React, ERB, partials, and Markdown, so this wasn’t the walk in the park I expected it to be. I ended up with a rather mindless but effective JavaScript script that fetches the sitemap, downloads each guide from the website in HTML format, parses the content and filters out irrelevant parts, converts all this to Markdown, and then uploads each guide to the vector database. Certainly not a glamorous thing, this script. But it does what I need it to do. And with OpenAI calculating embeddings for the 2,700 pages of the documentation for an eye-watering 50 cents, I have few incentives to improve this any time soon, unfortunately.

- As the uploaded documentation covers all our products, when you ask a question about how to redact PDFs, for example, the tool doesn’t know which technology you’re using. As explained above, I’m still working on its mind-reading abilities. So the next best solution was to add a dropdown where you can select your technology. It’s one more thing to think about, one more control you need to fidget with, I know. Please let me know if you have better ideas.
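To make the chunking change from the first bullet more concrete, here is a minimal sketch of what splitting a Markdown page by sections and code blocks could look like. It is not the retrieval plugin’s code or the exact logic behind the beta. The names `Chunk` and `splitMarkdown` are made up for illustration, and the sketch assumes that headings and fenced code blocks are the only boundaries that matter.

```typescript
// Hypothetical sketch: split a Markdown page into semantic chunks.
// Assumption: a chunk is either one heading-delimited section or one code block.

interface Chunk {
  kind: "section" | "code";
  text: string;
}

// Three backticks, built at runtime so this example can sit inside a code fence.
const FENCE = "`".repeat(3);

function splitMarkdown(markdown: string): Chunk[] {
  const chunks: Chunk[] = [];
  let buffer: string[] = [];
  let inCode = false;

  // Emit whatever has been collected so far as one chunk.
  const flush = (kind: Chunk["kind"]): void => {
    const text = buffer.join("\n").trim();
    if (text.length > 0) {
      chunks.push({ kind, text });
    }
    buffer = [];
  };

  for (const line of markdown.split("\n")) {
    const isFence = line.trimStart().startsWith(FENCE);
    if (isFence && !inCode) {
      flush("section"); // close the prose that precedes the code block
      buffer.push(line);
      inCode = true;
    } else if (isFence && inCode) {
      buffer.push(line);
      flush("code"); // keep the whole code block in one piece
      inCode = false;
    } else if (!inCode && /^#{1,6}\s/.test(line)) {
      flush("section"); // a heading starts a new section
      buffer.push(line);
    } else {
      buffer.push(line);
    }
  }
  // Emit the remainder, including an unterminated code block if there is one.
  flush(inCode ? "code" : "section");
  return chunks;
}
```

A real pipeline would probably still need a token limit as a fallback for very long sections. The point of the sketch is only that code and prose end up in separate chunks instead of being cut at an arbitrary token count.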
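For the streaming bullet above, the client side boils down to reading the response body piece by piece rather than waiting for all of it. The following is a rough sketch using the standard `ReadableStream` and `TextDecoder` web APIs. It isn’t taken from the tutorial or from the beta’s source, and `readAnswerStream` and `onFragment` are hypothetical names.

```typescript
// Hypothetical sketch: read a streamed answer fragment by fragment.
// Assumption: the server sends the answer as plain UTF-8 text chunks.

async function readAnswerStream(
  body: ReadableStream<Uint8Array>,
  onFragment: (fragment: string) => void,
): Promise<string> {
  const reader = body.getReader();
  const decoder = new TextDecoder();
  let answer = "";

  while (true) {
    const { done, value } = await reader.read();
    if (done) {
      break;
    }
    // stream: true keeps multi-byte characters intact across chunk boundaries.
    const fragment = decoder.decode(value, { stream: true });
    if (fragment.length > 0) {
      answer += fragment;
      onFragment(fragment); // for example, append the text to the page right away
    }
  }

  // Flush any bytes the decoder is still holding.
  const tail = decoder.decode();
  if (tail.length > 0) {
    answer += tail;
    onFragment(tail);
  }
  return answer;
}
```

In a browser, `body` would typically be the `body` property of a `fetch` response, and `onFragment` would update the answer element as text arrives.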

So what’s next? I can think of many things to improve, for sure. For one, it’d be great to add proper chat functionality where you can interact with the tool instead of just asking one-off questions. This aspiration is held back by the stateless OpenAI chat completion API, where the whole chat history needs to be included in each request. The tool relies on long context and long answers from OpenAI, so including the full history in each request is a no-go. There are ways around this, but for now, I just hope that the OpenAI API will soon introduce some stateful behavior and persist chat sessions for a limited amount of time. Another possible improvement is integrating the tool into the website’s internal search rather than loading it in an iframe on a separate page. And there are many, many more ways to take this tool to the next level.
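To see why the stateless API is a problem for chat, consider a rough sketch of how each request has to be assembled. The `role` and `content` message shape follows the chat completion format. Everything else is an assumption for illustration: the helper names, the four-characters-per-token estimate, and the history trimming, which is just one common workaround and not something the tool does today.

```typescript
// Hypothetical sketch: every request to a stateless chat API carries the full history.

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Assumption: roughly 4 characters per token for English text.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

function requestTokens(messages: ChatMessage[]): number {
  return messages.reduce((sum, m) => sum + estimateTokens(m.content), 0);
}

// Documentation context + all earlier turns + the new question, every single time.
function buildRequest(
  history: ChatMessage[],
  context: string,
  question: string,
): ChatMessage[] {
  return [
    { role: "system", content: "Answer using this documentation:\n" + context },
    ...history,
    { role: "user", content: question },
  ];
}

// One possible workaround: drop the oldest turns until the history fits a budget.
function trimHistory(history: ChatMessage[], budget: number): ChatMessage[] {
  const kept = [...history];
  while (kept.length > 0 && requestTokens(kept) > budget) {
    kept.shift();
  }
  return kept;
}
```

Because `buildRequest` prepends a long documentation context and the answers in `history` are long too, the request grows with every turn. Trimming keeps it bounded, at the cost of the tool forgetting the start of the conversation.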

But more importantly, what do you think should be next? Please go to the Ask AI page, try it out, and speak your mind!