String Literals, Character Encodings, and Multiplatform C++

Today’s C++ software needs to support text in multiple languages. If you want to target multiple platforms and compilers, understanding the little details about how string encoding works is crucial to delivering software that is correct, and to writing multilingual tests that are reliable when you run them on multiple platforms.

In this blog post, I’ll take a look at how two of the most popular C++ compilers, Clang and MSVC, turn the bytes in source code files into strings in a running application. This knowledge may seem obscure, but you need it to understand and reason about the character-encoding issues you may encounter when string literals contain text that isn’t in English.

An Introduction to Character Encoding

Strings in C++ programs are ubiquitous, but computers don’t understand the concept of strings. Instead, they represent strings as bytes — that is, just ones and zeroes. Enter the idea of a character encoding (formally, a character-encoding scheme), which is a method that maps between raw bytes and the string they represent. For example, the string "Hello" may have the byte representation “01001000 01100101 01101100 01101100 01101111” in a particular character encoding. There are several character encodings, and some of the most popular ones are:

- UTF-8

- UTF-16

- Latin-1

- JIS

This article only requires basic familiarity with the most popular character encodings described above. If you want additional details about how they actually encode data, see, for example, UTF-8 Everywhere.
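The byte sequence quoted above for "Hello" can be reproduced with a few lines of C++. This is only a sketch: the helper name to_binary is ours, and the result assumes an ASCII-compatible execution encoding such as UTF-8, Latin-1, or Windows-1252.

```cpp
#include <bitset>
#include <string>

// Renders each byte of `text` as eight binary digits, separated by spaces.
// Hypothetical helper, written only for this illustration.
std::string to_binary(const std::string& text) {
    std::string out;
    for (unsigned char byte : text) {
        if (!out.empty()) out += ' ';
        out += std::bitset<8>(byte).to_string();
    }
    return out;
}
```

Calling to_binary("Hello") yields the five groups of bits shown earlier, one group per byte.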

String Literals in C++

String literals are a programming language feature that represents string values within the source code of a program. C++ supports several types of string literals:

- "hello" represents the string "hello"

- L"hello" represents the wide string "hello"

- u8"hello" represents the string "hello", encoded in UTF-8

- u"hello" represents the string "hello", encoded in UTF-16

- U"hello" represents the string "hello", encoded in UTF-32

The next section will explain how the most popular C++ compilers convert the bytes in a source file, which possibly contains string literals, to strings.
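Before moving on, here is a small compile-time check of the literal kinds listed in this section. It is a sketch that assumes a C++14 or later compiler; the alias element_t is our own name, not a standard one.

```cpp
#include <type_traits>

// decltype of a string literal is an lvalue reference to a const array,
// so strip the reference, the array extent, and const to get the element type.
// element_t is a hypothetical alias introduced for this example.
template <typename Literal>
using element_t =
    std::remove_cv_t<std::remove_extent_t<std::remove_reference_t<Literal>>>;

static_assert(std::is_same<element_t<decltype("hello")>, char>::value, "narrow");
static_assert(std::is_same<element_t<decltype(L"hello")>, wchar_t>::value, "wide");
static_assert(std::is_same<element_t<decltype(u"hello")>, char16_t>::value, "UTF-16");
static_assert(std::is_same<element_t<decltype(U"hello")>, char32_t>::value, "UTF-32");

// u8 literals use char before C++20 and char8_t from C++20 on;
// either way the element is one byte wide.
static_assert(sizeof(element_t<decltype(u8"hello")>) == 1, "UTF-8");
```

The prefix fixes the element type of the literal; which bytes end up in those elements is what the following sections are about.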

Clang

Clang is a popular compiler written as part of the LLVM project. Clang simplifies character encoding handling by only supporting UTF-8. This means that if you have a C++ source file encoded in UTF-16 and want to compile it with Clang, the compiler will emit the following error:

    fatal error: UTF-16 (BE) byte order mark detected in 'sample.cpp', but encoding is not supported
    1 error generated.

As Clang always assumes source files are encoded in UTF-8, string literal handling simply involves converting between UTF-8 strings and what’s known as the “execution encoding,” which can be UTF-8, UTF-16, or UTF-32. The execution encoding depends on the character width of the string literal, which in turn depends on both how the string literal is declared and the platform that is the target of the compilation. For example:

- "hello": the char width is one byte

- L"hello": the char width is four bytes on Unix systems and two bytes on Windows

- u"hello": the char width is usually two bytes

- U"hello": the char width is usually four bytes

In general, there are only three possible character widths: one byte, two bytes, or four bytes. If the character width is one byte, then Clang simply validates that the literal string is encoded in valid UTF-8. If the character width is two bytes, Clang converts the string literal from UTF-8 to UTF-16. If the character width is four bytes, Clang converts the string literal from UTF-8 to UTF-32.
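The effect of these conversions is visible in the number of code units a literal ends up with. The following sketch counts them at compile time; code_units is a hypothetical helper, and the characters are written as escape sequences so the example doesn't depend on how this file itself is saved.

```cpp
#include <cstddef>

// Number of code units in a string literal, excluding the terminating null.
// Hypothetical helper for this example.
template <typename CharT, std::size_t N>
constexpr std::size_t code_units(const CharT (&)[N]) {
    return N - 1;
}

// U+00E9 (e with acute accent): two code units in UTF-8,
// one in UTF-16, and one in UTF-32.
static_assert(code_units(u8"\u00E9") == 2, "UTF-8 uses two bytes");
static_assert(code_units(u"\u00E9") == 1, "UTF-16 uses one 16-bit unit");
static_assert(code_units(U"\u00E9") == 1, "UTF-32 uses one 32-bit unit");

// U+1F600 lies outside the Basic Multilingual Plane: four UTF-8 bytes,
// a surrogate pair in UTF-16, and a single unit in UTF-32.
static_assert(code_units(u8"\U0001F600") == 4, "");
static_assert(code_units(u"\U0001F600") == 2, "");
static_assert(code_units(U"\U0001F600") == 1, "");

// The wide literal is the platform-dependent case: one unit where wchar_t
// is four bytes, two units (a surrogate pair) where it is two bytes.
constexpr std::size_t wide_units = code_units(L"\U0001F600");
static_assert(wide_units == (sizeof(wchar_t) == 2 ? 2 : 1), "");
```

The last assertion is the one to keep in mind for portable code: the same wide literal has a different length depending on the target platform.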

MSVC

MSVC is the Microsoft compiler included in Visual Studio. The internal handling of string literals and character encodings on Windows is much more complex, because, unlike Clang, MSVC doesn’t assume that every source file is encoded in UTF-8. Unfortunately, we don’t have access to the source code of MSVC to check what it does with literal strings, but the general process is described in this blog post. The rest of this section will distill the key details from that blog post.

From the Source File Encoding to UTF-8

Recent versions of MSVC encode strings internally as UTF-8. MSVC follows this process:

- If the source file has a byte order mark (BOM), then MSVC converts the encoding from UTF-16 to UTF-8 (or it leaves it as UTF-8 if it was originally encoded as UTF-8 with a BOM).

- If the source file doesn’t have a BOM, then it tries to detect if the source file is encoded in UTF-16 by looking at the first eight bytes of the file.

- If the source file doesn’t have a BOM and is not encoded in UTF-16, which is the typical case if the file was created on a Unix-like system, then MSVC decodes the source file using the system’s code page, and then it encodes the result in UTF-8.

From UTF-8 to the Execution Character Set

As described before in the section on string literals in C++, different string literals in C++ may have different character set representations. MSVC needs to convert between the encoding it uses internally, UTF-8, and the desired character set of the string literal.

This conversion again depends on the system’s code page when the literal string doesn’t have a prefix:

- "hello" will be encoded in the system’s code page (generally Windows-1252 on English systems)

- L"hello" will be encoded in UTF-16

- u"hello" will be encoded in UTF-16

- U"hello" will be encoded in UTF-32

Visual Studio 2015 Introduced Two Important Flags

As you can see, character encoding is a complex topic in MSVC, as there’s a dependency on the system’s code page and the source file encoding. To try to make things simpler, Microsoft introduced two compiler flags in Visual Studio 2015:

- /source-charset: This specifies the character encoding that will be used when decoding a source file.

- /execution-charset: This specifies the character encoding that will be used when encoding data into the execution encoding.

For example, using /source-charset:utf-8 decodes the source file as UTF-8, irrespective of the encoding of the source file or the system’s code page. This configuration makes MSVC work like Clang, in that it assumes the source file is encoded in UTF-8. Most source files nowadays are stored in UTF-8, anyway.

Using /execution-charset:utf-8, for example, lets us avoid the problem of depending on the system’s code page when C++ string literals are declared without a prefix. UTF-8 will always be used.
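To make the difference concrete, the sketch below encodes a single code point both ways. It doesn't call any compiler or Windows API; encode_utf8 and encode_windows_1252_basic are hypothetical helpers, and the second one only covers the part of Windows-1252 that coincides with Latin-1.

```cpp
#include <string>

// Encodes one Unicode code point as UTF-8. Surrogates and values above
// U+10FFFF are not rejected here, to keep the sketch short.
std::string encode_utf8(char32_t cp) {
    std::string out;
    if (cp < 0x80) {
        out += static_cast<char>(cp);
    } else if (cp < 0x800) {
        out += static_cast<char>(0xC0 | (cp >> 6));
        out += static_cast<char>(0x80 | (cp & 0x3F));
    } else if (cp < 0x10000) {
        out += static_cast<char>(0xE0 | (cp >> 12));
        out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
        out += static_cast<char>(0x80 | (cp & 0x3F));
    } else {
        out += static_cast<char>(0xF0 | (cp >> 18));
        out += static_cast<char>(0x80 | ((cp >> 12) & 0x3F));
        out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
        out += static_cast<char>(0x80 | (cp & 0x3F));
    }
    return out;
}

// Encodes ASCII and U+00A0..U+00FF, where Windows-1252 uses the byte with
// the same value as the code point. Returns an empty string otherwise.
std::string encode_windows_1252_basic(char32_t cp) {
    if (cp < 0x80 || (cp >= 0xA0 && cp <= 0xFF)) {
        return std::string(1, static_cast<char>(cp));
    }
    return std::string();
}
```

For U+00E9, the first function produces the two bytes C3 A9 and the second produces the single byte E9, so code that compares an unprefixed literal against UTF-8 data behaves differently depending on which execution character set was in effect.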

If you simply want both /source-charset:utf-8 and /execution-charset:utf-8, then you can pass the convenient /utf-8 compiler flag.
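One way to catch a missing flag early is a compile-time guard on the bytes of an unprefixed literal. This is a sketch of the idea rather than an established idiom; the function name narrow_literals_are_utf8 is ours.

```cpp
// True when the compiler encoded the unprefixed literal "\u00E9" as UTF-8.
// U+00E9 is C3 A9 in UTF-8, so the array holds two bytes plus the
// terminating null. Hypothetical helper for this example.
constexpr bool narrow_literals_are_utf8() {
    return sizeof("\u00E9") == 3 &&
           static_cast<unsigned char>("\u00E9"[0]) == 0xC3 &&
           static_cast<unsigned char>("\u00E9"[1]) == 0xA9;
}

static_assert(narrow_literals_are_utf8(),
              "narrow string literals are not UTF-8; with MSVC, pass /utf-8");
```

With Clang the assertion should hold by default. With MSVC it is expected to fail on a system whose code page is Windows-1252 unless the execution character set is set to UTF-8.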

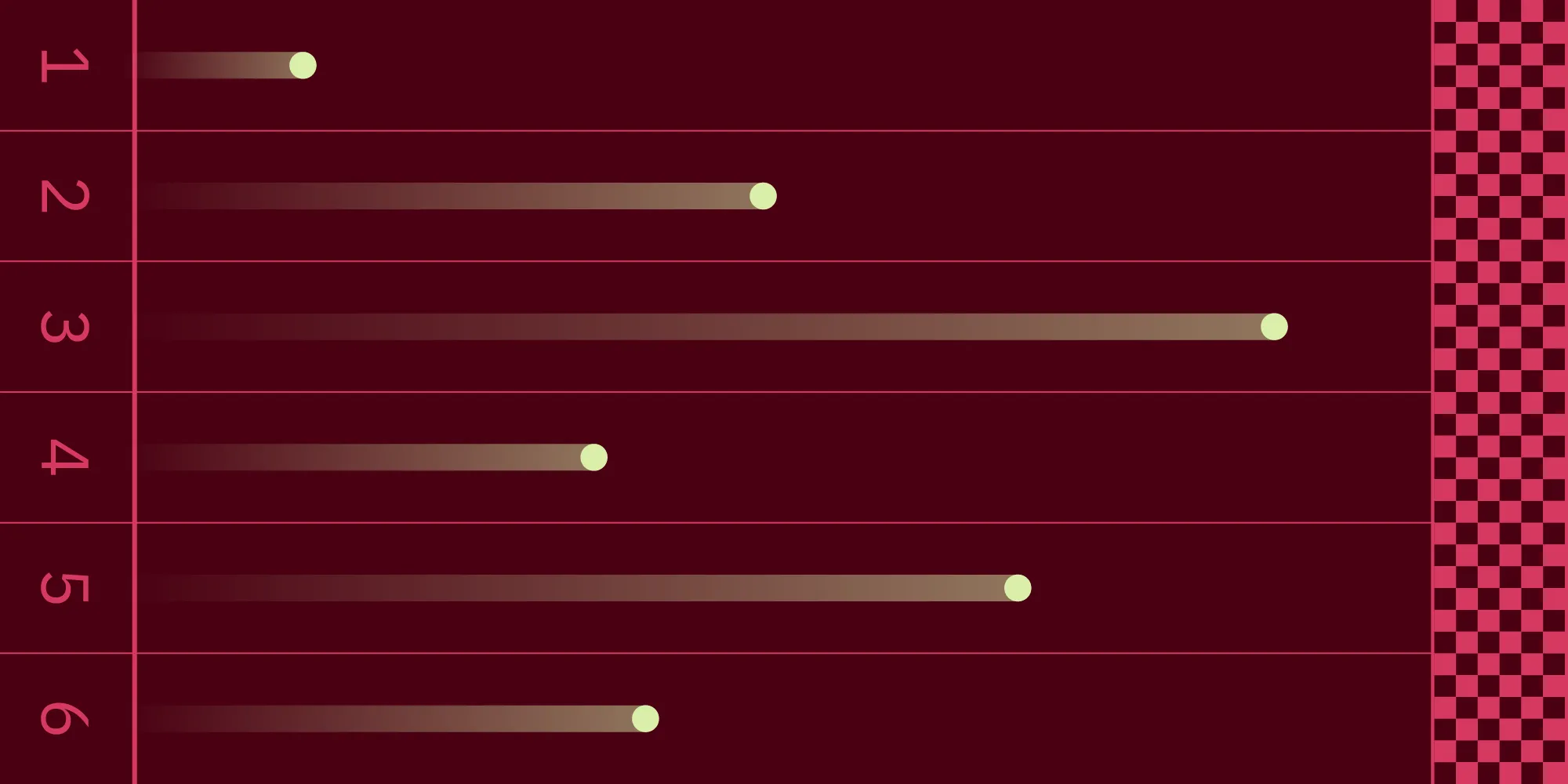

Our Recommendations for Simple Clang and MSVC Interoperability

You can see that the variability in how compilers interpret multibyte strings can be very confusing. We recommend you consider these rules to ensure your multilingual test files are interpreted correctly on both Unix-like and Windows systems:

- You can store source files that contain non-English strings in UTF-8 with a BOM. One of the problems with this solution is that storing files in UTF-8 with a BOM isn’t common, and it requires that you explicitly include the BOM when you save the file in your text editor or IDE. Another problem is that it’s possible to remove the BOM by mistake if you process source files using regular expressions. This kind of mistake is easy to miss in a code review because code review tools don’t show things like the BOM in a clear way. We consider saving files in UTF-8 with a BOM a possible, but fragile, approach.

- Alternatively, you can pass the /utf-8 compiler flag to MSVC. This flag simplifies things because MSVC will assume UTF-8 encoding, just like Clang. The only source of variability will be that the size of a wide char on Unix-like systems is four bytes, but on Windows systems it’s two bytes. If you use wide strings in your source files, you might need to take this into account, depending on your use case.

- Write string literals explicitly encoded as UTF-8 using the u8 prefix and \uXXXX escape sequences. For example, u8"\u0048\u0065\u006C\u006C\u006F" would reliably encode the string "Hello" as UTF-8. The tradeoff of this solution is that it’s less readable. However, it may be the best option when the character would otherwise be invisible. For example, u8"\uFEFF" represents the Unicode character “zero-width no-break space,” which is invisible in source files.

- Avoid wide strings if possible. As mentioned in the second recommendation, the size of a wide character is different depending on the platform. Using wchar_t and the corresponding string type std::wstring will introduce complexity if your code or test code relies implicitly or explicitly on the size of a wide character and needs to run on Unix-like and Windows systems. In some cases, using a wide character string is inevitable, like when interfacing with some Windows APIs.
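As an illustration of the u8 and escape-sequence recommendation, the sketch below copies the bytes of a u8 literal into a std::string so that a test can compare them. The helper utf8_bytes is hypothetical; the cast is there because u8 literals have element type char before C++20 and char8_t from C++20 on.

```cpp
#include <cstddef>
#include <string>

// Copies the bytes of a u8 literal into a std::string, without the
// terminating null. Hypothetical helper for this example.
template <typename CharT, std::size_t N>
std::string utf8_bytes(const CharT (&literal)[N]) {
    static_assert(sizeof(CharT) == 1, "expected a u8 or narrow literal");
    return std::string(reinterpret_cast<const char*>(literal), N - 1);
}
```

For instance, utf8_bytes(u8"\uFEFF") holds the three bytes EF BB BF regardless of the source file encoding or the system's code page, because both the character and its encoding are spelled out in the literal itself.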

Conclusion

This blog post explored the little details of character encoding in source files, C++ string literals, and compilers. We hope to have improved your understanding of these tricky concepts. We think that writing good multilingual software often requires a solid grasp of things like character encoding and how popular compilers work.